By equipping the mining truck with professional-grade sensors and a communication and positioning system, and by developing sensing, positioning, decision-making, planning and control algorithms, the mining truck gains single-vehicle intelligence. It then receives central instructions from the intelligent scheduling system for unmanned operation and, combining them with its own location, the surrounding environment and other information, completes the automatic driving cycle of loading, transportation and unloading.

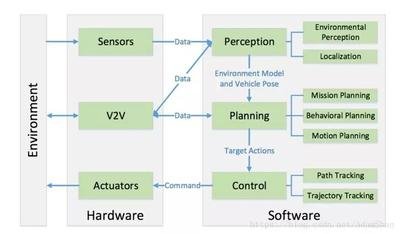

The core of the autonomous driving system can be summarized into three parts: Perception, Planning, and Control. The interaction between these parts and their interaction with vehicle hardware and other vehicles can be represented by the following figure:

Perception refers to the ability of an unmanned driving system to collect information from the environment and extract relevant knowledge from it. Among them, environmental perception refers specifically to the ability to understand the scene of the environment, such as the location of obstacles, detection of road signs/markings, detection of pedestrians and vehicles, and other semantic classification of data. Generally speaking, localization is also a part of perception. Localization is the ability of an unmanned vehicle to determine its position relative to the environment.

Planning is the process of making some purposeful decisions for an unmanned vehicle for a certain goal. For unmanned vehicles, this goal usually means reaching the destination from the departure point while avoiding obstacles, and constantly optimizing the driving trajectory and behavior to ensure the safety and comfort of passengers. The planning layer is usually subdivided into three layers: mission planning, behavioral planning, and motion planning.

Finally, control is the ability of the unmanned vehicle to accurately execute planned actions, which come from higher layers.

01. Perception

Environmental Perception

To ensure that the unmanned vehicle understands its environment, the environmental perception part of the unmanned driving system usually needs to obtain a large amount of information about the surroundings, including the location, speed and possible behavior of obstacles, drivable areas, traffic rules, etc. Unmanned vehicles usually obtain this information by fusing data from multiple sensors such as lidar, cameras and millimeter-wave radar. In this section, we briefly look at how lidar and cameras are used in the perception of unmanned vehicles.

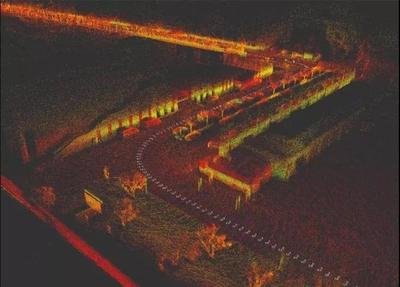

Lidar is a device that uses lasers for detection and ranging. It can emit millions of light pulses per second into the environment, and its rotating internal structure enables it to build a 3D map of the surroundings in real time.

Generally speaking, a lidar rotates and scans the surrounding environment at about 10 Hz. The result of one scan is a 3D map composed of dense points, each carrying (x, y, z) information; this map is called a point cloud. The figure below shows a point cloud built with a Velodyne VLP-32C lidar:

LiDAR is still the most important sensor in unmanned driving systems due to its reliability. However, in actual use, LiDAR is not perfect. There are often problems with point clouds being too sparse or even missing some points. It is difficult to identify the pattern of irregular object surfaces using LiDAR. LiDAR cannot be used in situations such as heavy rain.

In order to understand point cloud information, generally speaking, we perform two operations on point cloud data: segmentation and classification. Segmentation is to cluster discrete points in the point cloud into several entities, while classification is to distinguish which category these entities belong to (such as pedestrians, vehicles, and obstacles). Segmentation algorithms can be classified into the following categories:

Edge-based methods, such as gradient filtering, etc.

Region-based methods, which use regional features to cluster neighboring points. The basis for clustering is to use some specified criteria (such as Euclidean distance, surface normal, etc.). This type of method usually selects several seed points in the point cloud first, and then clusters the neighboring points from these seed points using the specified criteria;

Model-based (parametric) methods, which fit predefined models to the point cloud. Common methods include Random Sample Consensus (RANSAC) and the Hough Transform (HT);

Attribute-based methods, which first calculate the attributes of each point and then cluster the points associated with the attributes;

Graph-based methods;

Machine learning-based methods;
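As an illustration of the region-based family, here is a minimal Python sketch of Euclidean clustering by region growing. The function name `euclidean_clusters`, the `radius` threshold and the five sample points are assumptions made for this example; a real pipeline would replace the brute-force neighbor search with a spatial index such as a k-d tree.

```python
from collections import deque
from math import dist

def euclidean_clusters(points, radius):
    """Group point indices so that every point lies within `radius`
    of at least one other point in the same cluster."""
    unvisited = set(range(len(points)))
    clusters = []
    while unvisited:
        seed = min(unvisited)              # deterministic seed choice
        unvisited.remove(seed)
        cluster, queue = [seed], deque([seed])
        while queue:
            current = queue.popleft()
            # Brute-force neighbor search (illustrative only).
            near = [i for i in unvisited
                    if dist(points[current], points[i]) <= radius]
            for i in near:
                unvisited.remove(i)
                cluster.append(i)
                queue.append(i)
        clusters.append(sorted(cluster))
    return clusters

# Hypothetical (x, y, z) points: two well-separated groups.
points = [(0.0, 0.0, 0.0), (0.3, 0.0, 0.0), (0.6, 0.1, 0.0),
          (5.0, 5.0, 0.0), (5.2, 5.1, 0.0)]
clusters = euclidean_clusters(points, radius=0.5)
print(clusters)
```

Note that the third point is more than 0.5 from the seed but still joins the first cluster, because the region grows through its neighbor. This chaining is what distinguishes region growing from a single fixed-radius query around each seed.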

After the targets in the point cloud have been segmented, each one needs to be correctly classified. At this stage, machine learning classifiers such as the Support Vector Machine (SVM) are generally applied to features extracted from the clusters. In recent years, with the development of deep learning, the industry has begun to use specially designed Convolutional Neural Networks (CNNs) to classify three-dimensional point cloud clusters.

However, whether it is the feature extraction-SVM method or the original point cloud-CNN method, due to the low resolution of the lidar point cloud itself, for targets with sparse reflection points (such as pedestrians), point cloud-based classification is not reliable. Therefore, in practice, we often integrate lidar and camera sensors, use the high resolution of the camera to classify the target, use the reliability of lidar to detect obstacles and measure distance, and integrate the advantages of both to complete environmental perception.

In unmanned driving systems, we usually use image vision for road detection and for detecting targets on the road. Road detection includes lane line detection (Lane Detection) and drivable area detection (Drivable Area Detection); on-road target detection includes vehicle detection (Vehicle Detection), pedestrian detection (Pedestrian Detection), traffic sign and signal detection (Traffic Sign Detection), and the detection and classification of all other traffic participants.

Lane detection involves two aspects: the first is to identify the lane. For curved lanes, its curvature can be calculated. The second is to determine the offset of the vehicle itself relative to the lane (i.e., where the unmanned vehicle itself is on the lane). One method is to extract some lane features, including edge features (usually gradients, such as the Sobel operator), lane color features, etc., use polynomials to fit the pixels that we think may be lanes, and then determine the curvature of the lane ahead and the deviation of the vehicle relative to the lane based on the polynomial and the current position of the camera mounted on the vehicle.
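The polynomial-fitting step can be sketched in Python as follows. This is a hedged illustration, not a full lane detector: it assumes the candidate lane pixels have already been extracted and converted to metres in a bird's-eye view, and the names `fit_quadratic` and `radius_of_curvature` as well as the sample data are hypothetical.

```python
def fit_quadratic(ys, xs):
    """Least-squares fit of x = a*y**2 + b*y + c via the 3x3 normal equations."""
    # Augmented matrix [A^T A | A^T x] for the basis (y^2, y, 1).
    rows = [[0.0] * 4 for _ in range(3)]
    for y, x in zip(ys, xs):
        basis = (y * y, y, 1.0)
        for i in range(3):
            for j in range(3):
                rows[i][j] += basis[i] * basis[j]
            rows[i][3] += basis[i] * x
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(rows[r][col]))
        rows[col], rows[pivot] = rows[pivot], rows[col]
        for r in range(3):
            if r != col:
                factor = rows[r][col] / rows[col][col]
                rows[r] = [v - factor * p for v, p in zip(rows[r], rows[col])]
    return tuple(rows[i][3] / rows[i][i] for i in range(3))

def radius_of_curvature(a, b, y):
    """Radius of curvature of x = a*y**2 + b*y + c at distance y ahead."""
    return (1.0 + (2.0 * a * y + b) ** 2) ** 1.5 / abs(2.0 * a)

# Hypothetical lane points: y is the distance ahead of the camera (m),
# x is the lateral position of the lane centre relative to the camera (m).
ys = [0.0, 5.0, 10.0, 15.0, 20.0]
xs = [0.002 * y * y + 0.01 * y + 0.3 for y in ys]

a, b, c = fit_quadratic(ys, xs)
radius = radius_of_curvature(a, b, y=0.0)
offset = c   # lateral offset of the lane centre at the vehicle position
print(round(radius, 1), round(offset, 3))
```

Here the constant term of the polynomial gives the lateral deviation of the vehicle from the lane centre, and the quadratic term determines how sharply the lane bends ahead.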

One current approach to detecting drivable areas is to use a deep neural network to segment the scene directly, that is, to train a deep neural network for pixel-by-pixel classification and thereby segment the drivable area in the image.

The detection and classification of traffic participants currently mainly rely on deep learning models. Commonly used models include two categories:

Deep learning target detection algorithms based on region proposals, represented by R-CNN (R-CNN, SPP-net, Fast R-CNN, Faster R-CNN, etc.);

Deep learning target detection algorithms based on regression, represented by YOLO (YOLO, SSD, etc.).

02. Positioning

At the perception level of unmanned vehicles, the importance of positioning is self-evident. Unmanned vehicles need to know their exact position relative to the environment. The positioning here cannot have an error of more than 10cm. Imagine if our unmanned vehicle positioning error is 30cm, then this will be a very dangerous unmanned vehicle (for pedestrians and passengers), because the planning and execution layer of unmanned driving does not know that it has an error of 30cm. They still make decisions and control based on the premise of accurate positioning, so the decisions made in certain situations are wrong, causing accidents. It can be seen that unmanned vehicles need high-precision positioning.

The most widely used positioning method for unmanned vehicles is the fusion of the Global Positioning System (GPS) and the Inertial Navigation System (INS). GPS positioning accuracy ranges from tens of meters down to centimeters, and high-precision GPS sensors are relatively expensive. The fused GPS/IMU method cannot achieve high-precision positioning where the GPS signal is missing or weak, such as in underground parking lots or urban areas surrounded by high-rise buildings, so it can only be applied to unmanned driving tasks in some scenarios.

Map-assisted positioning algorithms are another widely used unmanned vehicle positioning algorithm. Simultaneous Localization And Mapping (SLAM) is a representative of this type of algorithm. The goal of SLAM is to build a map and use the map for positioning at the same time. SLAM determines the current vehicle position and the position of the current observed features by using the observed environmental features.

This is a process of estimating the current position from the prior estimate and the current observations. In practice, we usually use Bayesian filters for this, specifically the Kalman Filter, the Extended Kalman Filter and the Particle Filter.
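The predict/update cycle of a Bayesian filter can be illustrated with a one-dimensional Kalman filter. The sketch below is a deliberately simplified assumption: the vehicle moves along a line, the motion model is "add the commanded displacement", and the measurement noise is replaced by a fixed alternating offset so that the run is reproducible. The function name `kalman_step` and all numeric values are hypothetical.

```python
def kalman_step(mean, var, control, measurement, process_var, meas_var):
    """One predict/update cycle of a 1D Kalman filter."""
    # Predict: push the prior through the motion model x' = x + control.
    mean_pred = mean + control
    var_pred = var + process_var
    # Update: blend prediction and observation, weighted by uncertainty.
    gain = var_pred / (var_pred + meas_var)
    mean_new = mean_pred + gain * (measurement - mean_pred)
    var_new = (1.0 - gain) * var_pred
    return mean_new, var_new

mean, var = 0.0, 1000.0      # very uncertain initial position estimate
true_pos = 0.0
for step in range(10):
    true_pos += 1.0          # the vehicle advances 1 m per step
    # Deterministic stand-in for measurement noise: +0.5 m, -0.5 m, ...
    measurement = true_pos + (0.5 if step % 2 == 0 else -0.5)
    mean, var = kalman_step(mean, var, control=1.0, measurement=measurement,
                            process_var=0.01, meas_var=1.0)

print(round(mean, 2), round(var, 3), true_pos)
```

The estimate ends up closer to the true position than any single measurement, and its variance falls well below the measurement variance, which is the benefit of combining the prior with each new observation.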

Although SLAM is a research hotspot in the field of robot positioning, there are problems with using SLAM positioning in the actual unmanned vehicle development process. Unlike robots, unmanned vehicles move over long distances and in large open environments. In long-distance movement, as the distance increases, the deviation of SLAM positioning will gradually increase, resulting in positioning failure.

In practice, an effective method for positioning unmanned vehicles is to change the scan matching algorithm in the original SLAM. Specifically, we no longer map while positioning, but use sensors such as lidar to build a point cloud map of the area in advance, and add some “semantics” to the map through programs and manual processing (such as specific markings of lane lines, road networks, the location of traffic lights, traffic rules of the current road section, etc.). This map containing semantics is the high-precision map (HD Map) of our unmanned vehicle.

During actual positioning, the current lidar scan and the pre-built high-precision map are used for point cloud matching to determine the specific location of our unmanned vehicle in the map. This type of method is collectively referred to as scan matching method (Scan Matching). The most common scan matching method is the iterative closest point method (ICP), which completes point cloud registration based on the distance measurement between the current scan and the target scan.
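To make the ICP loop concrete, here is a minimal 2D sketch in Python. It is an illustration under simplifying assumptions rather than a production registration routine: points are 2D, nearest neighbors are found by brute force, and the names `transform`, `best_rigid_fit` and `icp` as well as the sample data are hypothetical.

```python
from math import atan2, cos, sin, dist

def transform(points, theta, tx, ty):
    """Rotate 2D points by theta (radians), then translate by (tx, ty)."""
    c, s = cos(theta), sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]

def best_rigid_fit(src, dst):
    """Closed-form 2D rigid motion minimising squared error between pairs."""
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(p[0] for p in dst) / n, sum(p[1] for p in dst) / n
    dot = cross = 0.0
    for (x1, y1), (x2, y2) in zip(src, dst):
        ax, ay, bx, by = x1 - sx, y1 - sy, x2 - dx, y2 - dy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = atan2(cross, dot)
    c, s = cos(theta), sin(theta)
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)

def icp(scan, reference, iterations=10):
    aligned = list(scan)
    for _ in range(iterations):
        # 1. Pair each scan point with its closest reference point.
        matches = [min(reference, key=lambda q: dist(p, q)) for p in aligned]
        # 2. Solve for the motion that best aligns the pairs and apply it.
        aligned = transform(aligned, *best_rigid_fit(aligned, matches))
    return aligned

# Hypothetical map points (an L-shaped wall) and a scan of the same wall
# taken from a slightly rotated and shifted pose.
reference = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0),
             (0.0, 1.0), (0.0, 2.0)]
scan = transform(reference, 0.05, 0.1, -0.05)

aligned = icp(scan, reference)
residual = max(dist(p, q) for p, q in zip(aligned, reference))
print(residual)
```

The sketch converges here because the initial pose error is small compared with the point spacing, so the nearest-neighbor pairing is already correct. With a poor initial guess, ICP can settle in a wrong local minimum, which is one reason a GPS or odometry prior is normally used to initialise it.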

In addition, the Normal Distributions Transform (NDT) is also a common method for point cloud registration. It divides the reference point cloud into grid cells, models the points in each cell with a normal distribution, and searches for the pose under which the current scan is most likely given those distributions. Positioning based on point cloud registration can also achieve an accuracy better than 10 centimeters.

Although point cloud registration can give the global positioning of the unmanned vehicle relative to the map, this type of method is too dependent on the high-precision map built in advance, and still needs to be used in conjunction with GPS positioning on open roads. On roads with relatively simple scenes (such as highways), the method of using GPS plus point cloud matching is relatively costly.

03. Planning

Task Planning

The hierarchical structure of the unmanned driving planning system originated from the DARPA Urban Challenge held in 2007. In the competition, most participating teams divided the planning module of the unmanned vehicle into three layers: mission planning, behavior planning and action planning (also called motion planning). Mission planning, also referred to here as task planning, path planning or route planning (Route Planning), is responsible for top-level path planning, such as selecting the path from the starting point to the end point.

We can process our current road system into a directed network graph (Directed Graph Network). This directed network graph can represent the connection between roads, traffic rules, road width and other information. It is essentially the “semantic” part of the high-precision map mentioned in the previous positioning section. This directed network graph is called a route network graph (Route Network Graph), as shown in the following figure:

Each directed edge in such a road network graph is weighted. The path planning problem of the unmanned vehicle therefore becomes the process of selecting the optimal (i.e., lowest-cost) path in the road network graph for the vehicle to reach a certain goal (usually getting from A to B), which is a directed graph search problem. Traditional algorithms such as Dijkstra’s Algorithm and the A* Algorithm are designed for optimal path search on discrete graphs and can be used to find the lowest-cost path in the road network graph.
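A minimal Python sketch of Dijkstra's algorithm on such a weighted directed graph is given below. The node names, edge weights and the function name `dijkstra` are assumptions chosen for illustration (a haul route from a loading point to a dump site); a real route network graph would carry many more attributes per edge.

```python
import heapq

def dijkstra(graph, start, goal):
    """Return (total_cost, path) of the lowest-cost route, or (inf, [])."""
    queue = [(0.0, start, [start])]
    settled = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in settled:
            continue               # a cheaper route to this node was found
        settled.add(node)
        for neighbor, weight in graph.get(node, {}).items():
            if neighbor not in settled:
                heapq.heappush(queue,
                               (cost + weight, neighbor, path + [neighbor]))
    return float("inf"), []

# Hypothetical road network: graph[a][b] is the cost of driving from a to b.
road_network = {
    "loading_point": {"junction_1": 4.0, "junction_2": 2.0},
    "junction_2": {"junction_1": 1.0, "dump_site": 7.0},
    "junction_1": {"dump_site": 3.0},
    "dump_site": {},
}

cost, route = dijkstra(road_network, "loading_point", "dump_site")
print(cost, route)
```

The direct edge from the loading point to `junction_1` is not used, because the detour through `junction_2` is cheaper overall. Edge weights need not be distances; they can encode travel time, gradient or any other cost the scheduler wants to minimise.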

Behavior Planning

Behavior planning is sometimes also called decision making. Its main task is to decide the next action the unmanned vehicle should execute, according to the goal of the task planning and the current local situation (the location and behavior of other vehicles and pedestrians, the current traffic rules, etc.). This layer can be understood as the co-pilot of the vehicle: based on the goal and the current traffic situation, it directs the driver whether to follow or overtake another vehicle, whether to stop and wait for pedestrians to pass or to go around them, and so on.

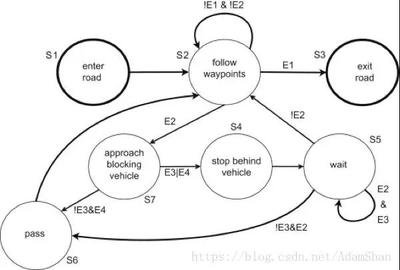

One method of behavior planning is to use a complex finite state machine (FSM) containing a large number of action phrases. The finite state machine starts from a basic state and jumps to different action states according to different driving scenarios, passing the action phrases to the lower action planning layer. The following figure is a simple finite state machine:

As shown in the figure above, each state is a decision about the vehicle’s action. There are jump conditions between states, and some states can loop back to themselves (such as the tracking state and the waiting state in the figure above). Although it is currently the mainstream behavior decision-making method for unmanned vehicles, the finite state machine still has significant limitations. First, complex behavior decisions require a large number of manually designed states. Second, the vehicle may encounter a situation that the finite state machine has not considered. Third, if the finite state machine is designed without deadlock protection, the vehicle may even become stuck in a deadlock.
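A table-driven finite state machine can be sketched in a few lines of Python. The states, events and transition table below are hypothetical and much smaller than anything used on a real vehicle; they are only meant to show jump conditions and self-loops.

```python
# Hypothetical transition table: (current state, event) -> next state.
TRANSITIONS = {
    ("cruise", "slow_vehicle_ahead"): "follow",
    ("cruise", "pedestrian_crossing"): "wait",
    ("follow", "slow_vehicle_ahead"): "follow",      # self-loop
    ("follow", "adjacent_lane_clear"): "overtake",
    ("follow", "road_clear"): "cruise",
    ("overtake", "road_clear"): "cruise",
    ("wait", "pedestrian_crossing"): "wait",         # self-loop
    ("wait", "road_clear"): "cruise",
}

def next_state(state, event):
    """Look up the next behavior; unknown (state, event) pairs keep the
    current state, which is exactly where an unconsidered scenario hides."""
    return TRANSITIONS.get((state, event), state)

events = ["slow_vehicle_ahead", "slow_vehicle_ahead", "adjacent_lane_clear",
          "road_clear", "pedestrian_crossing", "pedestrian_crossing",
          "road_clear"]

state = "cruise"
history = [state]
for event in events:
    state = next_state(state, event)
    history.append(state)

print(history)
```

Every new scenario adds rows to the table, which illustrates why the number of hand-designed states and transitions grows quickly as the required behavior becomes more complex.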

Action Planning

The process of planning a series of actions to achieve a certain purpose (such as avoiding obstacles) is called action planning. Generally speaking, two indicators are used to evaluate the performance of action planning algorithms: computational efficiency and completeness. Computational efficiency is the efficiency with which one action plan is computed, and it depends largely on the configuration space. If an action planning algorithm returns a solution in finite time whenever the problem has one, and reports that no solution exists when there is none, we call the algorithm complete.

Configuration space: A set that defines all possible configurations of a robot. It defines the dimensions in which the robot can move. For the simplest two-dimensional discrete problem, the configuration space is [x, y]. The configuration space of an unmanned vehicle can be very complex, depending on the motion planning algorithm used.

After the concept of configuration space is introduced, the action planning of an unmanned vehicle becomes: given an initial configuration (Start Configuration), a target configuration (Goal Configuration) and several constraints (Constraints), find a series of actions in the configuration space to reach the target configuration. The result of executing these actions is to transfer the unmanned vehicle from the initial configuration to the target configuration while satisfying the constraints.

In the application scenario of unmanned vehicles, the initial configuration is usually the current state of the unmanned vehicle (current position, speed and angular velocity, etc.), the target configuration comes from the upper layer of action planning – the behavior planning layer, and the constraints are the vehicle’s motion restrictions (maximum turning angle, maximum acceleration, etc.).

Obviously, the computational cost of motion planning in a high-dimensional configuration space is enormous. To guarantee the completeness of the planning algorithm, we would have to search almost all possible paths, which gives rise to the “curse of dimensionality” in continuous motion planning. The core idea for solving this problem is to convert the continuous space model into a discrete model. The specific methods can be summarized into two categories: combinatorial planning and sampling-based planning.

Combinatorial methods of motion planning find paths through continuous configuration spaces without resorting to approximations. Because of this property, they can be called exact algorithms. Combinatorial methods find complete solutions by establishing discrete representations of planning problems. For example, in the DARPA Urban Challenge, the motion planning algorithm used by CMU’s unmanned vehicle BOSS first uses a path planner to generate candidate paths and target points (these paths and target points are reachable given the vehicle dynamics), and then selects the optimal path through an optimization algorithm.

Another discretization method is grid decomposition (Grid Decomposition Approaches). After gridding the configuration space, we can usually use a discrete graph search algorithm (such as A*) to find an optimal path.
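As a hedged illustration of grid decomposition followed by graph search, the Python sketch below runs A* on a small occupancy grid. It assumes a 4-connected grid with unit step cost and a Manhattan-distance heuristic; the grid layout and the function name `astar` are hypothetical.

```python
import heapq

def astar(grid, start, goal):
    """A* on a 4-connected grid; cells with value 1 are obstacles.
    Returns the list of cells from start to goal, or None."""
    def heuristic(cell):
        # Manhattan distance never overestimates on a 4-connected grid.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]
    came_from = {start: None}
    best_cost = {start: 0}
    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if cost > best_cost[cell]:
            continue                      # stale heap entry
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < len(grid) and 0 <= nc < len(grid[0])
            if inside and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get((nr, nc), float("inf")):
                    best_cost[(nr, nc)] = new_cost
                    came_from[(nr, nc)] = cell
                    heapq.heappush(
                        open_heap,
                        (new_cost + heuristic((nr, nc)), new_cost, (nr, nc)))
    return None

# Hypothetical occupancy grid (0 = free, 1 = obstacle).
grid = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
    [0, 1, 1, 0],
]
path = astar(grid, (0, 0), (3, 3))
print(path)
```

A plain grid like this ignores the vehicle's heading and turning radius. Planners for real vehicles therefore search a richer state space, for example one that includes orientation, at the price of a larger graph.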

Sampling-based methods are widely used due to their probabilistic completeness. The most common algorithms are PRM (Probabilistic Roadmaps), RRT (Rapidly-Exploring Random Tree) and FMT* (Fast Marching Tree). In unmanned vehicle applications, the state sampling method needs to consider the control constraints between two states, and also requires a method that can efficiently check whether a sampled state is reachable from its parent state. Later, we will introduce State-Lattice Planners, a sampling-based motion planning algorithm, in detail.

04. Control

The control layer is the bottom layer of the unmanned vehicle system. Its task is to carry out the planned actions, so the evaluation index of the control module is control accuracy. The control system takes measurements internally: the controller compares the vehicle’s measured state with the expected state and outputs control actions accordingly. This process is called feedback control.

Feedback control is widely used in the field of automatic control. The most typical feedback controller is the PID controller (Proportional-Integral-Derivative Controller). Its control principle is based on a simple error signal: the control output is the sum of three terms, one proportional to the error, one proportional to the integral of the error, and one proportional to the derivative of the error.
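The three terms can be written down directly in Python. The sketch below is a minimal discrete-time PID controller driving a toy speed model in which the commanded acceleration takes effect immediately; the class name `PID`, the gains and the plant are assumptions for illustration, not tuned values for any real vehicle.

```python
class PID:
    """Discrete PID controller: u = Kp*e + Ki*integral(e) + Kd*de/dt."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, error, dt):
        self.integral += error * dt
        # No derivative on the first call, since there is no previous error.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return (self.kp * error
                + self.ki * self.integral
                + self.kd * derivative)

# Toy plant (assumption): speed changes by the commanded acceleration.
pid = PID(kp=0.8, ki=0.2, kd=0.05)
speed, target, dt = 0.0, 10.0, 0.1
for _ in range(300):                      # simulate 30 seconds
    accel = pid.update(target - speed, dt)
    speed += accel * dt

print(round(speed, 3))
```

The controller only ever sees the current error and its history. Nothing in it describes how the vehicle responds to a command, which is the limitation discussed next.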

PID is still the most widely used controller in industry because it is simple to implement and stable in performance. However, as a pure feedback controller, it has a problem when controlling unmanned vehicles: it reacts only to the current error. Actuators such as the braking mechanism respond with a delay, which introduces delay into the control loop, and since the PID controller contains no system model, it cannot account for that delay. To solve this problem, we introduce a control method based on model prediction.

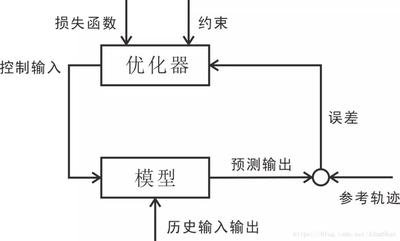

Model predictive control is built from four components:

Prediction model: A model that predicts the state over a period of time in the future based on the current state and the control input. In unmanned vehicle systems, it usually refers to the kinematic/dynamic model of the vehicle.

Feedback correction: The process of correcting the model with feedback, which gives predictive control a strong ability to resist disturbances and overcome system uncertainty.

Rolling optimization: Repeatedly optimizing the control sequence over a receding horizon to obtain the predicted sequence closest to the reference trajectory.

Reference trajectory: The desired trajectory that the vehicle is expected to follow.

The figure below shows the basic structure of model predictive control. Since model predictive control is optimized based on the motion model, the control delay problem faced in PID control can be taken into account by building a model, so model predictive control has a high application value in unmanned vehicle control.
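The following Python sketch shows how a prediction model lets the controller account for actuator delay. It is a toy example under strong assumptions: the state is (speed, pending acceleration), a commanded acceleration only takes effect one time step later, the control set is a handful of discrete values, and the optimizer is a brute-force search over all sequences in a three-step horizon. The names `predict` and `mpc_control` and all constants are hypothetical.

```python
from itertools import product

DT = 0.5                                   # time step in seconds
CANDIDATES = (-2.0, -1.0, 0.0, 1.0, 2.0)   # allowed acceleration commands
HORIZON = 3                                # number of steps to look ahead

def predict(state, accel_cmd):
    """Prediction model with a one-step actuator delay: the speed changes
    by the previously issued command, and the new command becomes pending."""
    speed, pending = state
    return (speed + pending * DT, accel_cmd)

def mpc_control(state, ref_speed):
    """Rolling optimization: score every control sequence over the horizon
    and return only the first command of the best one."""
    best_cost, best_first = float("inf"), 0.0
    for sequence in product(CANDIDATES, repeat=HORIZON):
        cost, s = 0.0, state
        for u in sequence:
            s = predict(s, u)
            cost += (s[0] - ref_speed) ** 2 + 0.01 * u ** 2
        if cost < best_cost:
            best_cost, best_first = cost, sequence[0]
    return best_first

state, ref_speed = (0.0, 0.0), 5.0
commands = []
for _ in range(10):
    u = mpc_control(state, ref_speed)   # re-plan from the measured state
    commands.append(u)
    state = predict(state, u)           # here the plant matches the model

print(commands, state[0])
```

In this run the controller stops commanding acceleration while the speed is still 4.0, one step before the target, because its model tells it that the pending command will close the remaining gap. A controller that reacts only to the current error would keep accelerating at that point and overshoot.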

05. Summary

In this overview, we outlined the basic structure of the unmanned driving system. The unmanned driving software system is usually divided into three layers: perception, planning and control. To some extent, the unmanned vehicle can be regarded as a “manned robot” in this hierarchical system. Perception specifically includes environmental perception and positioning. The breakthroughs of deep learning in recent years have given perception technology based on images and deep learning an increasingly important role in environmental perception. With the help of artificial intelligence, we are no longer limited to perceiving obstacles, but are gradually coming to understand what the obstacles are, understand scenes, and even predict the behavior of target obstacles. We will learn more about machine learning and deep learning in the next two chapters.

In actual unmanned vehicle perception, we usually need to fuse measurements from multiple sensors such as lidar, cameras and millimeter-wave radar. This involves fusion algorithms such as the Kalman filter and the extended Kalman filter.

There are many positioning methods for unmanned vehicles and robots. The current mainstream methods are GPS and inertial navigation system fusion, and scan matching based on lidar point clouds. The focus will be on ICP, NDT and other point cloud matching algorithms.

The planning module is also divided into three layers: task planning (also known as path planning), behavior planning and action planning. The task planning method based on the road network and discrete path search algorithm will be introduced later. In behavior planning, we will focus on the application of finite state machines in behavior decision-making. In the action planning algorithm layer, we will focus on the planning method based on sampling.

For the control module of unmanned vehicles, we often use control methods based on model prediction. Before studying the model predictive control algorithm, however, we looked at the PID controller as an introduction to basic feedback control. Next we will learn the two simplest vehicle models, the kinematic bicycle model and the dynamic bicycle model. Finally, we will introduce model predictive control.